Deep Learning at Scale References

Check out the following projects for deep learning at scale.

TensorFlowOnSpark

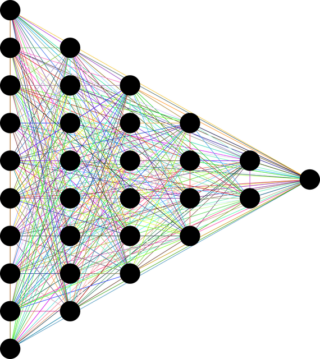

Developed by Yahoo, TensorFlowOnSpark brings scalable deep learning to Apache Hadoop and Apache Spark clusters. By combining salient features of the deep learning framework TensorFlow with those of the big-data frameworks Apache Spark and Apache Hadoop, TensorFlowOnSpark enables distributed deep learning on a cluster of GPU and CPU servers.

https://github.com/yahoo/TensorFlowOnSpark

BigDL: Distributed Deep Learning on Apache Spark

Another distributed deep learning library, BigDL lets users write deep learning applications as Spark programs that run directly on top of existing Spark or Hadoop clusters. Modeled after Torch, BigDL provides comprehensive support for deep learning, including numeric computing (via Tensor) and high-level neural networks. In addition, users can load pre-trained Caffe or Torch models into Spark programs using BigDL.

https://github.com/intel-analytics/BigDL